I am a PhD candidate in Computational Linguistics at Stony Brook University, affiliated with the Institute for Advanced Computational Science (IACS). I am fortunate to be advised by Dr. Owen Rambow.

Before coming to Stony Brook, I completed a bachelor's degree in Chinese Language and Literature at Hunan University and a master's degree in Applied Linguistics at the University of Saskatchewan.

I am a lifelong learner at heart, and as a result my research interests have kept evolving ever since I was in senior high school in 2015. Currently, my work focuses on evaluating large (vision/audio/omni) language models from a human-centric perspective, spanning themes such as multi-step and agentic reasoning, human–AI interaction, personalization, multimodality, and post-training. I prioritize research grounded in real-world use cases and open-ended challenges, with an emphasis on scalable and interpretable evaluation methodologies.

I enjoy making new connections, so feel free to reach out for any reason, even just for a coffee chat!

News

- Jan, 2026: Launched a new Blogs section on my website, featuring essays I wrote between 2015 and 2019 (mostly in Chinese, with English translations by GPT-5.2). I hope it will motivate me to write more going forward.

- Aug, 2025: I started working at Amazon as an Applied Scientist intern, researching Large Audio Language Models (LALMs) for Alexa AI: developing audio integration methods for user satisfaction prediction, and fine-tuning LALMs and Omni models to reduce modality gaps.

-

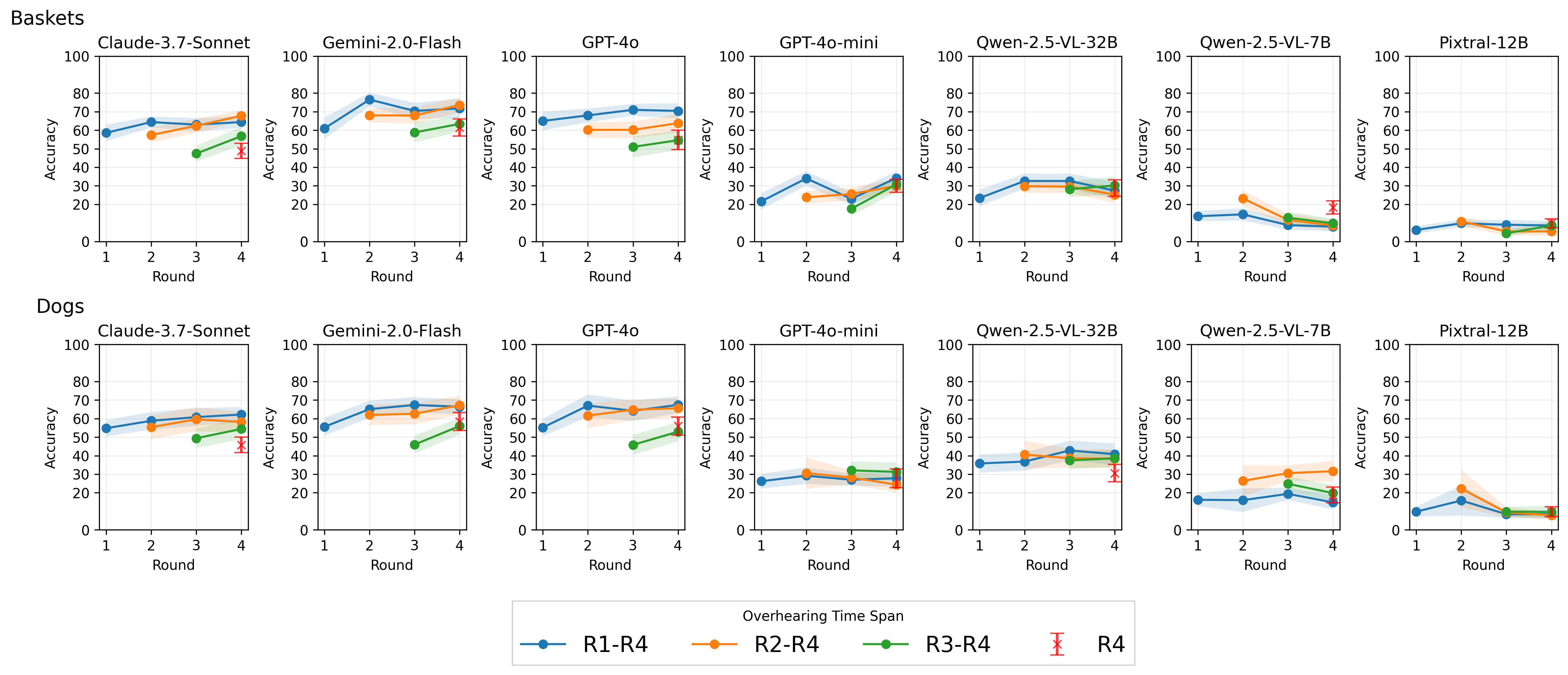

Aug, 2025: My first-authored paper LVLMs are Bad at Overhearing Human Referential Communication has been accepted to EMNLP 2025 (Main).

- Aug, 2025: My first-authored paper "Catch Me If You Can? Not Yet: LLMs Still Struggle to Imitate the Implicit Writing Styles of Everyday Authors" has been accepted to EMNLP 2025 (Findings).

- May, 2025: I started working at Meta as a Software Engineer (Machine Learning) intern. Using Hack (Meta's PHP dialect), I built an LVLM-based agentic workflow for trend detection, validation, magnitude labeling, and post content quality rating.

-

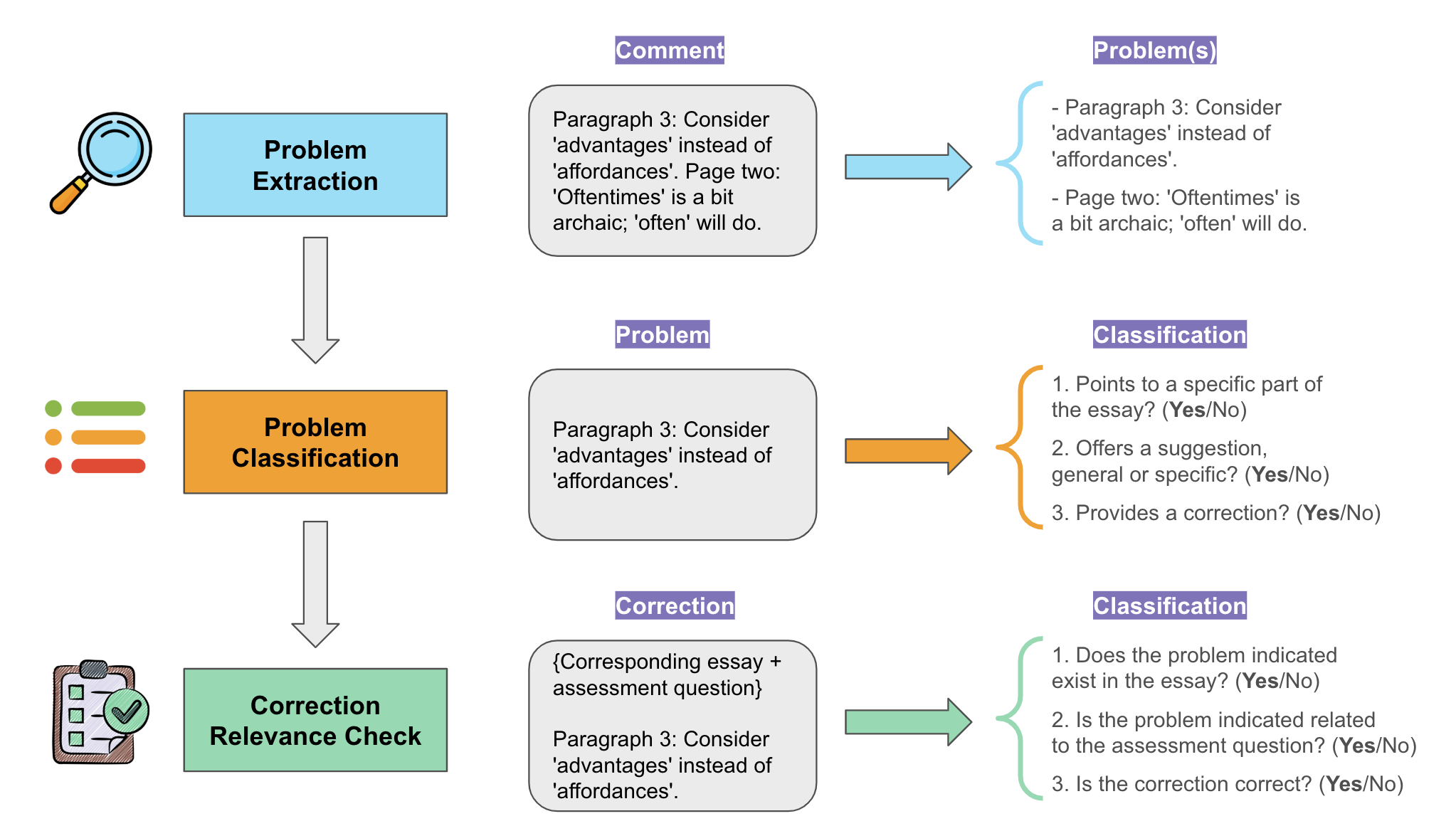

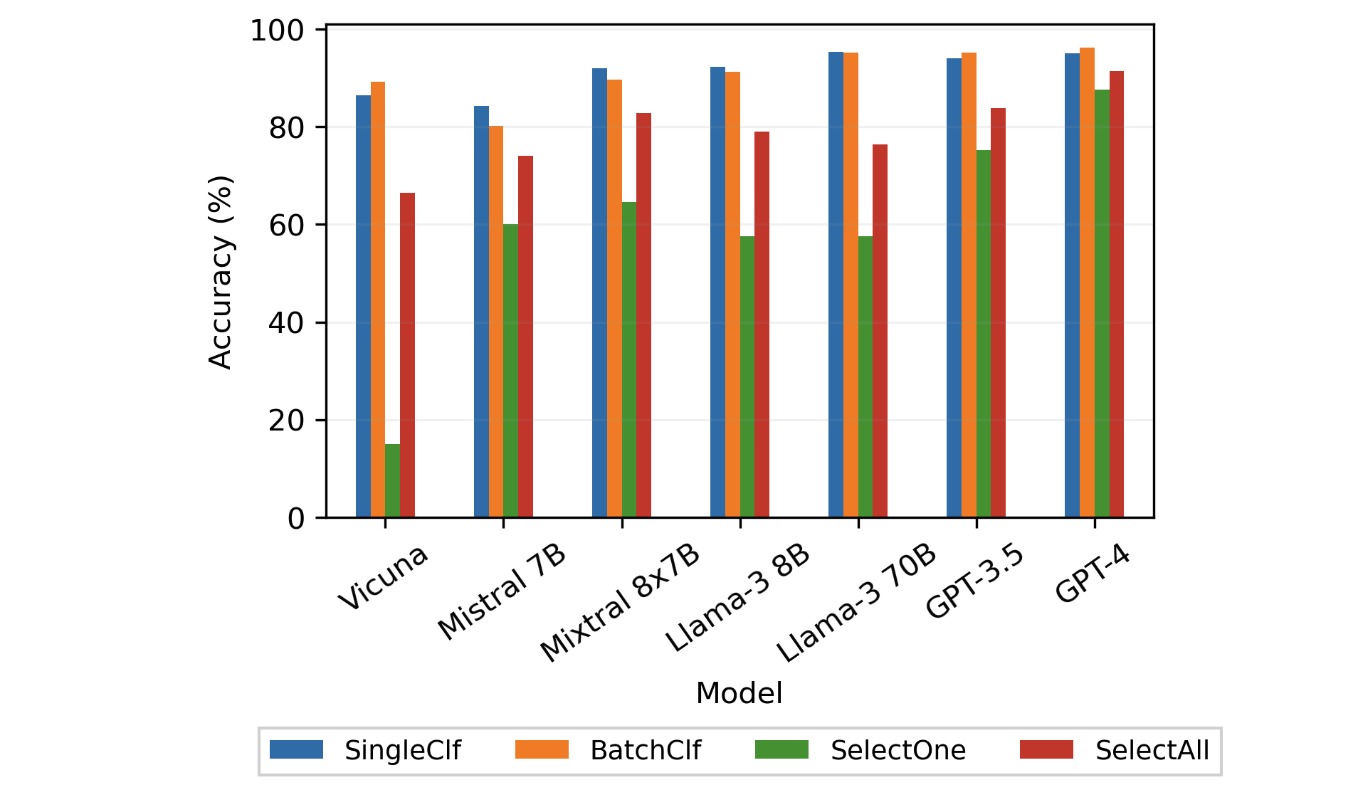

May, 2025: My first-authored paper LLMs can Perform Multi-Dimensional Analytic Writing Assessments: A Case Study of L2 Graduate-Level Academic English Writing has been accepted to ACL 2025 (Main).

- Feb, 2025: I advanced to PhD candidacy after passing my second qualifying paper.

- Aug, 2024: I received the Junior Researcher Award from the Institute for Advanced Computational Science at Stony Brook University.

- May, 2024: I started working at The Home Depot as a Data Scientist intern, where I developed various LLM-based systems for topic modeling, classification, and validation. See this blog post for details.

- Jun, 2023: I became a trainee in the Bias-NRT (National Science Foundation Research Traineeship) program at Stony Brook University.

- Aug, 2022: I started my PhD in Computational Linguistics at Stony Brook University.